Rich 3D Perception

Research Vision

In this line of work, we conduct research on robotic spatial perception: interpreting and using sensor data about the shape of the environment, for example to create maps and localise within them. Beyond purely geometric perception, we are particularly interested in enriching the robot’s environment model with additional data, such as information about changes or movements in the environment, the materials that surfaces are made of, or other semantic information that represents the robot’s understanding of what it sees. In addition, we work on methods and representations for the geometric 3D perception that forms the foundation of the robot perception pipeline.

Notable Results

3D-NDT for mapping and navigation

We have a history of working with the 3D-NDT (normal distributions transform) representation for geometric mapping and localisation. We have demonstrated its effectiveness in field environments as well as in structured environments that pose challenges by being highly dynamic and self-similar. One notable recent result is an evaluation on a challenging set of benchmark data sets, in which we compare 3D-NDT scan matching methods to efficient baseline implementations of the ICP algorithm in more than 40 000 situations. The evaluation demonstrates that NDT methods provide good performance in a wide range of scenarios, especially when faced with scan data that has weak mutual geometric structure.
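
To illustrate the representation itself, the sketch below builds a simple NDT-style model from a point cloud: points are grouped into cubic cells, and each cell with enough points is summarised by the mean and covariance of its points. This is a minimal illustration under stated assumptions, not our implementation; the function name `build_ndt_cells` and the parameters `cell_size` and `min_points` are hypothetical.

```python
import math
from collections import defaultdict


def build_ndt_cells(points, cell_size=1.0, min_points=3):
    """Group 3D points into cubic cells and fit one Gaussian per cell.

    Hypothetical helper for illustration: returns a dict that maps an
    integer cell index (ix, iy, iz) to a (mean, covariance) pair.
    """
    buckets = defaultdict(list)
    for p in points:
        key = tuple(math.floor(c / cell_size) for c in p)
        buckets[key].append(p)

    cells = {}
    for key, pts in buckets.items():
        n = len(pts)
        if n < min_points:
            # Too few samples for a meaningful covariance estimate.
            continue
        mean = [sum(p[i] for p in pts) / n for i in range(3)]
        # Unbiased sample covariance (3x3, stored as nested lists).
        cov = [
            [
                sum((p[i] - mean[i]) * (p[j] - mean[j]) for p in pts) / (n - 1)
                for j in range(3)
            ]
            for i in range(3)
        ]
        cells[key] = (mean, cov)
    return cells
```

A scan matcher built on such a model would score a candidate pose by how well the transformed points of a new scan fit the per-cell Gaussians; that optimisation step is not shown here.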

SDF mapping

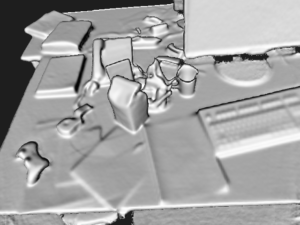

3D reconstruction using the SDF-Tracker algorithm, estimating camera pose and fusing depth images into a dense 3D model on the CPU (code)
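
As a rough illustration of what fusing depth images into a signed distance field involves, the sketch below shows the weighted running-average update that is common in truncated SDF mapping, applied to a single voxel. It is a generic sketch rather than the SDF-Tracker code; the function name `fuse_tsdf` and the parameters `truncation`, `w_new` and `w_max` are assumptions made for this example.

```python
def fuse_tsdf(d_old, w_old, d_new, truncation=0.1, w_new=1.0, w_max=64.0):
    """Fuse one new signed-distance measurement into a voxel.

    d_old, w_old: distance and weight currently stored in the voxel.
    d_new: signed distance observed in the current depth image.
    Returns the updated (distance, weight) pair.
    """
    # Truncate the measurement so that only the band near the surface matters.
    d_new = max(-truncation, min(truncation, d_new))
    w_sum = w_old + w_new
    # Weighted running average of all measurements seen so far.
    d_fused = (w_old * d_old + w_new * d_new) / w_sum
    # Cap the weight so the model can still adapt to later observations.
    return d_fused, min(w_sum, w_max)
```

Starting from an empty voxel (weight 0), each call blends the new observation with the stored value; the surface is then found where the fused distance crosses zero.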


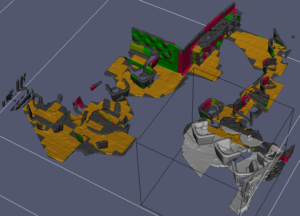

Mapping a large environment by shifting the reconstruction volume and encoding the voxels that leave it as eigen-shape descriptors.
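
The idea of encoding voxels as low-dimensional descriptors can be sketched as a projection onto a small set of basis shapes. The example assumes that a mean block and an orthonormal basis (the "eigen-shapes") have already been learned, for instance with PCA over many voxel blocks; the helper names `encode` and `decode` and the flat-list block layout are hypothetical.

```python
def encode(block, mean, basis):
    """Project a flattened voxel block onto the basis shapes.

    block, mean: lists of signed-distance values of equal length.
    basis: list of orthonormal basis vectors, each as long as the block.
    Returns one coefficient per basis vector (the descriptor).
    """
    centred = [b - m for b, m in zip(block, mean)]
    return [sum(c * e for c, e in zip(centred, axis)) for axis in basis]


def decode(descriptor, mean, basis):
    """Reconstruct an approximate voxel block from its descriptor."""
    out = list(mean)
    for coeff, axis in zip(descriptor, basis):
        for i, e in enumerate(axis):
            out[i] += coeff * e
    return out
```

Because the descriptor has fewer entries than the block has voxels, the encoding is lossy: decoding recovers only the part of the block that the basis shapes can express.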
